JSON Import - Advanced API Data Processing

Data Import

JSON Import is a sophisticated Excel add-in feature that transforms how you work with JSON data by providing intelligent parsing, automatic flattening, and flexible import options. Whether you're importing API responses, processing web data, or analyzing JSON files, this tool converts complex nested data into Excel-friendly formats with full control over the import process. Instead of manual JSON processing or complex transformations, you get instant conversion of JSON data into structured Excel tables.

Key Benefits

How to Use

Basic JSON Import Process

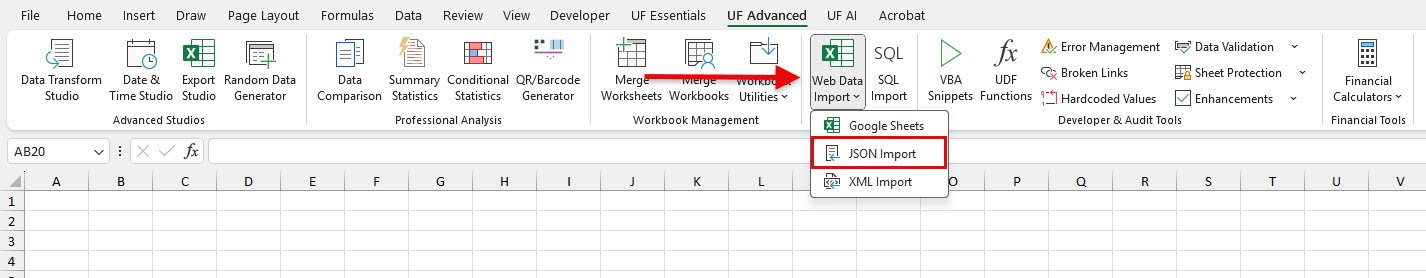

- Go to UF Advanced tab → Data Import & Export group

- Click Web Data Import → JSON Import

- Choose your JSON source:

- File: Browse and select JSON file

- URL: Enter API endpoint or JSON URL

- Text: Paste JSON content directly

- Configure import options (flattening, arrays, etc.)

- Click Preview to see processed data

- Click Import to bring data into Excel

Advanced JSONPath Queries

- Enter your JSON source (file, URL, or text)

- Specify JSONPath expression to extract specific data

Examples: $.users[*], $.data.products, $..price

- Preview results to verify extraction

- Import targeted data directly to Excel

Examples

Example 1: API Response Processing

Scenario: Processing customer data from a REST API response.

JSON Structure:

{

"customers": [

{"id": 1, "name": "John Doe", "email": "john@example.com", "address": {"city": "New York", "zip": "10001"}},

{"id": 2, "name": "Jane Smith", "email": "jane@example.com", "address": {"city": "Los Angeles", "zip": "90210"}}

]

}Implementation:

- Enter API URL in JSON Import

- Use JSONPath: $.customers[*] to extract customer array

- Enable "Flatten Nested Objects" for address data

- Result: Excel table with columns: id, name, email, address.city, address.zip

Example 2: E-commerce Product Analysis

Scenario: Analyzing product data from an e-commerce API for inventory management.

Implementation:

- Import from URL: https://api.store.com/products

- Use JSONPath: $.products[*] to get product array

- Enable "Include Array Index" to track product order

- Set array separator to "; " for multi-value fields

- Result: Structured product catalog ready for Excel analysis

Example 3: Social Media Analytics

Scenario: Processing JSON export from social media analytics containing nested engagement data.

Implementation:

- Import JSON file from social media platform

- Use JSONPath: $.posts[*].engagement to focus on engagement metrics

- Flatten nested objects to get likes, shares, comments as separate columns

- Result: Engagement analysis ready for Excel charts and pivot tables

Advanced Configuration

JSONPath Query Syntax

- Root Element: $ represents the root of the JSON document

- Array Access: $.users[*] selects all items in the users array

- Nested Properties: $.data.products accesses nested properties

- Recursive Descent: $..price finds all price properties at any level

- Conditional Selection: $.users[?(@.active)] selects active users only

Flattening Options

- Nested Object Flattening: Converts complex nested structures to columns

- Dot Notation: Uses dot notation for nested property names (e.g., address.city)

- Array Handling: Converts arrays to delimited strings or separate rows

- Depth Control: Limits flattening depth for complex structures

- Type Preservation: Maintains data types during flattening process

Import Customization

- Array Index Inclusion: Optionally includes array indices in output

- Custom Array Separators: Choose how array values are joined (comma, semicolon, etc.)

- Header Generation: Automatic creation of meaningful column names

- Data Type Detection: Automatic recognition and conversion of data types

Troubleshooting

Invalid JSON errors

- Verify JSON syntax is correct using online validators

- Check for missing quotes, brackets, or commas

- Ensure proper character encoding for international data

Empty results from JSONPath

- Verify JSONPath expression matches JSON structure

- Check JSONPath syntax using online testers

- Remember that JSONPath expressions are case-sensitive

- Ensure array indexing starts at 0

Memory issues with large files

- Use JSONPath to extract only needed data

- Process large JSON files in smaller batches

- Close other applications when processing very large files

- Consider splitting large JSON files before import

Flattening problems

- Adjust flattening depth for complex structures

- Review data types and formatting after import

- Use appropriate array separators for your analysis needs

- Test flattening options with sample data first

- Validate JSON syntax before import to avoid processing errors

- Use JSONPath queries to import only the data you need for better performance

- Always preview data structure before importing to verify results

- Understand your JSON structure to plan the most effective import strategy

- Use appropriate flattening options based on data complexity

- Limit flattening depth for very complex nested structures

- Test with small samples before processing large JSON files

- Leverage JSONPath to extract targeted data and reduce processing time

- Use consistent approaches for similar JSON structures across projects

Common Use Cases

API Data Integration

- REST API Consumption: Import data from web APIs for analysis

- Webhook Data Processing: Process JSON webhook payloads

- Multi-endpoint Aggregation: Combine data from multiple JSON sources

- Real-time Data Analysis: Import live JSON data for current analysis

Business Intelligence

- Analytics Dashboard Feeding: Import JSON analytics data for dashboards

- Performance Monitoring: Process JSON logs and metrics

- Customer Data Analysis: Analyze customer data from JSON CRM exports

- Financial Data Processing: Import JSON financial data for analysis

Data Transformation

- ETL Processes: Extract, transform, and load JSON data into Excel

- Data Normalization: Convert complex JSON structures to normalized tables

- Report Automation: Automate report generation from JSON data sources

- Data Validation: Use Excel features to validate imported JSON data

Frequently Asked Questions

The limit depends on your system memory, but the tool is optimized for large files. Use JSONPath queries to reduce data volume if needed.

Currently, the tool supports publicly accessible JSON URLs. For authenticated APIs, download the JSON first and import as a file.

Arrays can be converted to delimited strings (comma-separated values) or processed as separate rows, depending on your configuration.

While not automatically saved, you can document your JSONPath expressions for consistent reuse across similar imports.

The tool supports standard JSONPath syntax including filters, recursive descent, and array operations.

Related Documentation

Google Sheets Import - Public Sheet Data Integration

Import data from public Google Sheets directly into Excel with Google Sheets Imp...

Read DocumentationXML Import - Advanced Structured Data Processing

Transform XML data into Excel with XML Import's powerful parsing, XPath queries,...

Read DocumentationSQL Import - Database Integration & Query Tool

Connect Excel to databases with SQL Import. Execute SQL queries, import data fro...

Read Documentation