AI Data Validation - Quality Assessment Tool


AI Data Validation performs a comprehensive quality assessment of your Excel datasets to check whether they are ready for AI and machine learning projects. It identifies data quality issues and structural problems, then provides specific recommendations for fixing them, helping you build reliable, high-quality datasets for ML model training.


How to Use

Running Validation

- Select Your Dataset: Highlight the complete Excel range including headers

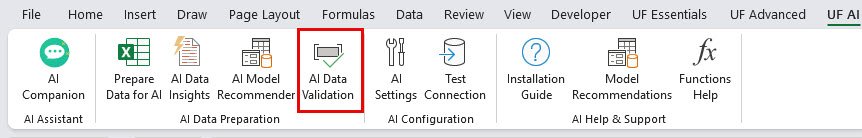

- Launch Validation: Go to UF Advanced tab → AI Tools → Validate Data for AI

- Review Results: The tool displays a detailed validation report

- Address Issues: Use the report's recommendations to improve data quality

- Re-validate: Run validation again after making improvements

Understanding Validation Results

The validation report categorizes issues by severity:

Critical Issues (Must Fix)

- Completely Empty Columns: Columns with no data that should be removed

- Inconsistent Row Structure: Rows with different numbers of columns

- Duplicate Headers: Column names that appear multiple times
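To make the three critical checks concrete, here is a minimal sketch in Python. It assumes the selected range has been read into a list of rows with the header row first, and that blank cells arrive as None or empty strings; the function names are hypothetical and this is not the tool's actual implementation.

```python
from collections import Counter

def is_missing(value):
    """Treat None and blank strings as missing cells (an assumption)."""
    return value is None or (isinstance(value, str) and value.strip() == "")

def find_critical_issues(rows):
    """Return critical-issue messages for a header-first table."""
    header, data = rows[0], rows[1:]
    issues = []

    # Duplicate headers: any column name that appears more than once.
    for name, count in Counter(header).items():
        if count > 1:
            issues.append(f"Duplicate header: {name!r} appears {count} times")

    # Inconsistent row structure: rows whose length differs from the header.
    for i, row in enumerate(data, start=2):  # header counts as row 1
        if len(row) != len(header):
            issues.append(f"Row {i} has {len(row)} columns, expected {len(header)}")

    # Completely empty columns: every data cell is missing or absent.
    for col, name in enumerate(header):
        cells = [row[col] if col < len(row) else None for row in data]
        if cells and all(is_missing(c) for c in cells):
            issues.append(f"Empty column: {name!r}")
    return issues
```

For example, a table with headers `["id", "name", "name", "notes"]`, a short fourth row, and a blank `notes` column produces one message for each of the three checks.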

Quality Warnings (Should Address)

- High Missing Value Rates: Columns with >50% missing values

- High Cardinality: Columns with >95% unique values in large datasets

- Small Dataset Size: Datasets with <100 rows for ML training
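The warning thresholds above can be expressed as a short sketch. It uses the 50%, 95%, and 100-row figures from this page, but the page does not say what counts as a "large" dataset, so `LARGE_DATASET_ROWS` is a hypothetical cutoff. The sketch also assumes uniqueness is measured over non-missing values only; the tool may compute it differently.

```python
def is_missing(value):
    """Treat None and blank strings as missing cells (an assumption)."""
    return value is None or (isinstance(value, str) and value.strip() == "")

MISSING_WARN = 0.50        # >50% missing values (from this page)
CARDINALITY_WARN = 0.95    # >95% unique values (from this page)
MIN_ROWS = 100             # fewer than 100 rows (from this page)
LARGE_DATASET_ROWS = 1000  # hypothetical definition of "large"

def find_quality_warnings(rows):
    """Return quality-warning messages for a header-first table."""
    header, data = rows[0], rows[1:]
    n = len(data)
    warnings = []
    if n < MIN_ROWS:
        warnings.append(f"Small dataset: {n} rows (100+ recommended)")
    for col, name in enumerate(header):
        cells = [row[col] if col < len(row) else None for row in data]
        present = [c for c in cells if not is_missing(c)]
        missing_rate = 1 - len(present) / n if n else 0.0
        if missing_rate > MISSING_WARN:
            warnings.append(f"{name!r}: {missing_rate:.0%} missing")
        # Cardinality is only checked on large datasets.
        if n >= LARGE_DATASET_ROWS and present:
            unique_ratio = len(set(present)) / len(present)
            if unique_ratio > CARDINALITY_WARN:
                warnings.append(f"{name!r}: {unique_ratio:.0%} unique values")
    return warnings
```

An ID column is the typical high-cardinality case: every value is unique, so it carries no pattern a model can generalize from.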

Informational (Consider)

- Moderate Missing Values: Columns with 20-50% missing values

- Duplicate Rows: Exact duplicate entries in the dataset

- Dataset Size Warnings: Datasets with <50 rows (very small)
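The informational checks can be sketched the same way. This assumes the 20-50% band includes both endpoints (the page does not say), reuses the header-first row layout, and counts every repeat of a row beyond its first occurrence as a duplicate. It is an illustration, not the tool's code.

```python
from collections import Counter

def is_missing(value):
    """Treat None and blank strings as missing cells (an assumption)."""
    return value is None or (isinstance(value, str) and value.strip() == "")

def find_informational(rows):
    """Return informational notes for a header-first table."""
    header, data = rows[0], rows[1:]
    n = len(data)
    notes = []
    if n < 50:
        notes.append(f"Very small dataset: {n} rows")
    for col, name in enumerate(header):
        cells = [row[col] if col < len(row) else None for row in data]
        rate = sum(is_missing(c) for c in cells) / n if n else 0.0
        if 0.20 <= rate <= 0.50:  # moderate band; endpoints assumed inclusive
            notes.append(f"{name!r}: {rate:.0%} missing (moderate)")
    # Exact duplicates: count every occurrence beyond the first.
    counts = Counter(tuple(row) for row in data)
    dupes = sum(c - 1 for c in counts.values() if c > 1)
    if dupes:
        notes.append(f"{dupes} exact duplicate row(s)")
    return notes
```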

Key Features

Comprehensive Quality Checks

- Structural Validation: Ensures consistent row and column structure

- Completeness Analysis: Identifies missing values and empty columns

- Consistency Assessment: Validates data format and type consistency

- Duplicate Detection: Finds duplicate rows and headers

- Size Adequacy: Evaluates dataset size for ML training requirements

Critical Issue Detection

- Empty Columns: Identifies completely empty columns that provide no value

- Inconsistent Structure: Detects rows with varying column counts

- High Missing Value Rates: Flags columns with excessive missing data (>50%)

- Duplicate Headers: Finds duplicate column names that could cause processing errors

- Data Type Conflicts: Identifies columns with mixed or inconsistent data types
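A data type conflict typically means a column mixes numbers and text, such as an age column containing both `30` and `"thirty"`. The sketch below shows one way to detect this, assuming cells arrive as Python values and that numeric-looking strings should count as numbers. The type labels are hypothetical and may not match the categories the tool reports.

```python
def is_missing(value):
    """Treat None and blank strings as missing cells (an assumption)."""
    return value is None or (isinstance(value, str) and value.strip() == "")

def cell_type(value):
    """Classify a non-missing cell; numeric-looking strings count as numbers."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "boolean"
    if isinstance(value, (int, float)):
        return "number"
    if isinstance(value, str):
        try:
            float(value)
            return "number"
        except ValueError:
            return "text"
    return type(value).__name__  # e.g. "datetime" for date cells

def find_type_conflicts(rows):
    """Map each column name with mixed types to its sorted type labels."""
    header, data = rows[0], rows[1:]
    conflicts = {}
    for col, name in enumerate(header):
        types = {cell_type(row[col]) for row in data
                 if col < len(row) and not is_missing(row[col])}
        if len(types) > 1:
            conflicts[name] = sorted(types)
    return conflicts
```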

ML Readiness Assessment

- Training Suitability: Evaluates if dataset is suitable for ML model training

- Size Requirements: Checks if dataset meets minimum size requirements (100+ rows recommended)

- Quality Thresholds: Assesses against professional ML data quality standards

- Preprocessing Needs: Identifies required data cleaning and preparation steps

Validation Categories

Structural Integrity

- Column Count Consistency: All rows must have the same number of columns

- Header Validation: Column names must be unique and non-empty

- Data Boundaries: The data range must start and end exactly where the data does

Data Completeness

- Missing Value Analysis: Percentage of missing values per column

- Empty Column Detection: Columns with no data across all rows

- Sparse Data Identification: Columns with very low data density

Data Consistency

- Format Consistency: Consistent data formats within columns

- Type Consistency: Consistent data types within columns

- Encoding Consistency: Proper character encoding throughout the dataset

Dataset Adequacy

- Size Requirements: Minimum row counts for reliable ML training

- Feature Adequacy: Sufficient number of meaningful columns

- Target Variable Presence: Identification of potential target variables

Quality Scoring System

Overall Quality Assessment

The validation provides an overall quality assessment:

- ✓ Excellent: No critical issues, minimal warnings

- ⚠ Good: Minor issues that don't prevent ML use

- ⚠ Fair: Some issues that should be addressed

- ❌ Poor: Critical issues that must be fixed before ML use
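The bands are described qualitatively, so any numeric mapping is a guess. The sketch below shows one way to turn issue counts into these four ratings, with hypothetical warning cutoffs. The tool's real rules are not documented here.

```python
# Hypothetical cutoffs: this page describes the bands qualitatively only.
MAX_WARNINGS_EXCELLENT = 1
MAX_WARNINGS_GOOD = 3

def overall_rating(critical_count, warning_count):
    """Map issue counts to the four rating bands."""
    if critical_count > 0:
        return "Poor"       # critical issues must be fixed before ML use
    if warning_count <= MAX_WARNINGS_EXCELLENT:
        return "Excellent"  # no critical issues, minimal warnings
    if warning_count <= MAX_WARNINGS_GOOD:
        return "Good"       # minor issues that don't prevent ML use
    return "Fair"           # several issues that should be addressed
```

Checking critical issues first ensures a single critical problem always yields "Poor", however clean the rest of the dataset is.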

Specific Quality Metrics

- Completeness Score: Percentage of non-missing data

- Consistency Score: Structural and format consistency

- Uniqueness Score: Ratio of unique to total rows

- Overall Quality: Weighted combination of all metrics
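The metrics can be sketched as simple ratios. This assumes completeness is measured over header-width cells, measures only the structural part of consistency (the share of rows whose width matches the header), and combines the scores with hypothetical weights, because the page does not state the tool's weighting.

```python
def is_missing(value):
    """Treat None and blank strings as missing cells (an assumption)."""
    return value is None or (isinstance(value, str) and value.strip() == "")

# Hypothetical weights; the tool's actual weighting is not documented here.
WEIGHTS = {"completeness": 0.4, "consistency": 0.3, "uniqueness": 0.3}

def quality_metrics(rows):
    """Return scores in [0, 1] for a header-first table."""
    header, data = rows[0], rows[1:]
    width = len(header)
    total_cells = width * len(data)
    filled = sum(1 for row in data for col in range(width)
                 if col < len(row) and not is_missing(row[col]))
    scores = {
        "completeness": filled / total_cells if total_cells else 0.0,
        # Structural part only: share of rows whose width matches the header.
        "consistency": sum(len(r) == width for r in data) / len(data) if data else 0.0,
        # Unique rows divided by total rows.
        "uniqueness": len({tuple(r) for r in data}) / len(data) if data else 0.0,
    }
    scores["overall"] = sum(WEIGHTS[k] * scores[k] for k in WEIGHTS)
    return scores
```

For a four-row table with one blank cell and one repeated row, completeness is 7/8 and uniqueness is 3/4.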

Common Issues and Solutions

Critical Issues

Empty Columns

- Problem: Columns with no data provide no value for ML

- Solution: Remove empty columns from dataset

- Prevention: Clean data before analysis
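If the data is processed outside Excel, removing empty columns is a single filter. A minimal sketch, assuming the same header-first list of rows and treating None and blank strings as empty. The function name is illustrative.

```python
def is_missing(value):
    """Treat None and blank strings as missing cells (an assumption)."""
    return value is None or (isinstance(value, str) and value.strip() == "")

def drop_empty_columns(rows):
    """Return a copy of a header-first table without all-missing columns."""
    header, data = rows[0], rows[1:]
    keep = [col for col in range(len(header))
            if any(col < len(row) and not is_missing(row[col]) for row in data)]
    # Rebuild every row (header included) from the kept column indexes.
    return [[row[col] if col < len(row) else None for col in keep] for row in rows]
```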

Inconsistent Row Structure

- Problem: Rows with different column counts cause processing errors

- Solution: Ensure all rows have the same number of columns

- Prevention: Use consistent data entry practices
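One way to fix inconsistent rows is to pad short rows with empty cells and flag overlong rows for manual review. A sketch of that approach, assuming the first row defines the expected width. Padding is only one option: a short row can also mean values have shifted into the wrong columns, so check the flagged rows by hand.

```python
def normalize_row_widths(rows):
    """Pad short rows with None; return (fixed_rows, overlong_row_numbers)."""
    width = len(rows[0])
    fixed, too_long = [], []
    for i, row in enumerate(rows, start=1):
        if len(row) > width:
            too_long.append(i)  # needs manual review; don't silently truncate
            fixed.append(list(row))
        else:
            fixed.append(list(row) + [None] * (width - len(row)))
    return fixed, too_long
```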

Duplicate Headers

- Problem: Multiple columns with the same name are ambiguous when referenced by name and can cause processing errors

- Solution: Rename duplicate columns with unique identifiers

- Prevention: Use descriptive, unique column names
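Renaming duplicates with unique identifiers can be automated by appending a counter suffix. A minimal sketch: the `_2`, `_3` suffix convention is an assumption, and the loop skips any suffix that already exists as a column name. Descriptive names chosen by hand are still preferable.

```python
from collections import Counter

def dedupe_headers(header):
    """Append _2, _3, ... to repeated names, e.g. ['x', 'x'] -> ['x', 'x_2']."""
    seen = Counter()
    used = set(header)
    result = []
    for name in header:
        seen[name] += 1
        if seen[name] == 1:
            result.append(name)  # first occurrence keeps its name
            continue
        n = seen[name]
        candidate = f"{name}_{n}"
        while candidate in used:  # avoid clashing with an existing column name
            n += 1
            candidate = f"{name}_{n}"
        used.add(candidate)
        result.append(candidate)
    return result
```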

Quality Warnings

High Missing Value Rates

- Problem: Columns with >50% missing values may not be useful

- Solution: Consider imputation strategies or remove problematic columns

- Prevention: Improve data collection processes
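A common imputation strategy fills gaps with the median in numeric columns and the most frequent value (mode) elsewhere. This sketch uses Python's `statistics` module and assumes one column is passed as a list of values. It is one illustrative strategy, not the tool's recommendation engine.

```python
import statistics

def is_missing(value):
    """Treat None and blank strings as missing cells (an assumption)."""
    return value is None or (isinstance(value, str) and value.strip() == "")

def impute_column(values):
    """Fill gaps with the median (numeric columns) or the mode (other columns)."""
    present = [v for v in values if not is_missing(v)]
    if not present:
        return list(values)  # nothing to learn from; consider dropping the column
    numeric = all(isinstance(v, (int, float)) and not isinstance(v, bool)
                  for v in present)
    fill = statistics.median(present) if numeric else statistics.mode(present)
    return [fill if is_missing(v) else v for v in values]
```

In an ML workflow, compute the fill value from the training rows only and reuse it for validation and test data, so that information from held-out rows does not leak into training.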

High Cardinality

- Problem: Columns with too many unique values may not be useful for ML

- Solution: Group similar values or use embedding techniques

- Prevention: Design appropriate categorical variables
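Grouping similar values often starts with collapsing rare categories into a shared label. In this sketch, `min_count` and the `"Other"` label are hypothetical parameters: choose a cutoff that suits your data.

```python
from collections import Counter

def group_rare_categories(values, min_count=5, other="Other"):
    """Replace categories seen fewer than min_count times with a shared label."""
    counts = Counter(values)
    return [v if counts[v] >= min_count else other for v in values]
```

For example, with `min_count=3`, a column holding five `"a"` values, two `"b"` values, and one `"c"` keeps `"a"` and maps both `"b"` and `"c"` to `"Other"`.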

Small Dataset Size

- Problem: Datasets with fewer than 100 rows may not provide reliable ML results

- Solution: Collect more data or use data augmentation techniques

- Prevention: Plan for adequate sample sizes

Best Practices

Pre-Validation Preparation

- Clean Obvious Issues: Fix apparent problems before running validation

- Standardize Formats: Ensure consistent date, number, and text formats

- Remove Test Data: Exclude test entries and placeholder data

- Verify Headers: Ensure column names are descriptive and unique
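Standardizing dates usually means converting every variant to one format, such as ISO 8601 (`YYYY-MM-DD`). The sketch below tries a hypothetical list of known formats in order using `datetime.strptime`. It assumes the dates are stored as text; cells already holding date values need no parsing.

```python
from datetime import datetime

# Hypothetical list of formats seen in the source data; extend as needed.
KNOWN_FORMATS = ["%Y-%m-%d", "%d/%m/%Y", "%d %b %Y"]

def to_iso_date(text):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # try the next format
    return None
```

Format order matters: a value such as `03/04/2024` matches whichever day/month pattern appears first, so include only the pattern your source actually uses. Returning None for unmatched values lets you review them instead of guessing.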

Post-Validation Actions

- Prioritize Critical Issues: Address critical problems first

- Document Changes: Keep records of data cleaning actions

- Re-validate: Run validation again after making changes

- Plan Preprocessing: Use results to plan ML preprocessing steps

Ongoing Quality Management

- Regular Validation: Validate data quality regularly as new data arrives

- Quality Monitoring: Track quality metrics over time

- Process Improvement: Use validation results to improve data collection

- Team Training: Educate team members on data quality best practices

Common Use Cases

ML Project Preparation

- Pre-Training Validation: Ensure data quality before model training

- Data Pipeline Quality: Validate data at each stage of processing

- Production Readiness: Confirm datasets meet production standards

Data Governance

- Quality Standards: Establish and maintain data quality standards

- Compliance Checking: Ensure data meets regulatory requirements

- Audit Preparation: Document data quality for audits

Business Intelligence

- Report Reliability: Ensure data quality for accurate reporting

- Analysis Preparation: Validate data before business analysis

- Decision Support: Provide confidence in data-driven decisions

Integration with Other AI Tools

Workflow Integration

- Validate First: Always run validation before other AI tools

- Address Issues: Fix critical problems identified by validation

- Generate Insights: Use AI Data Insights for detailed analysis

- Prepare for ML: Use Prepare Data for AI for export

- Get Recommendations: Use AI Model Recommender for model selection

Quality-Driven Workflow

- Validation → Insights → Preparation → Modeling

- Each step builds on the quality foundation established by validation

Frequently Asked Questions

How often should I validate my data?

Validate whenever you receive new data or make significant changes to existing datasets.

Which issues should I fix first?

Address critical issues first, then work on warnings. Some issues may be acceptable depending on your use case.

Can I use data that still has warnings?

Yes, but be aware of potential impacts on model performance and plan appropriate preprocessing.

How should I handle high-cardinality columns?

Consider grouping similar values, using embedding techniques, or removing the column if it's not essential.

Related Documentation

Prepare Data for AI - ML-Ready Dataset Export

Transform Excel data into production-ready AI datasets with automated export, da...

AI Data Insights - Comprehensive Dataset Analysis

Generate detailed data insights with column analysis, data types, quality metric...

AI Model Recommender - ML Model Selection Guide

Get intelligent ML model recommendations based on your data characteristics, pro...