AI Model Recommender - ML Model Selection Guide


AI Model Recommender analyzes your Excel dataset and recommends machine learning models based on data characteristics, problem type, and dataset size. It suggests appropriate models, tools, and implementation approaches, and provides effort estimates to guide your ML project planning and execution.


How to Use

Getting Recommendations

- Select Your Dataset: Highlight the complete Excel range including headers

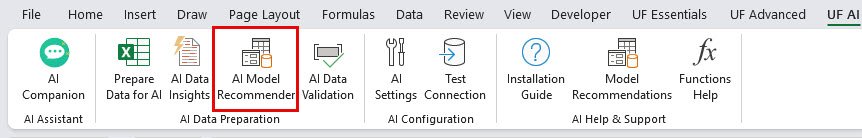

- Launch Recommender: Go to UF Advanced tab → AI Tools → AI Model Recommender

- Choose Problem Type: Select from available options or use Auto-Detect

- Review Recommendations: The system displays the recommendations in a detailed dialog

- Plan Implementation: Use recommendations to guide your ML project planning

Problem Type Selection

Classification

- Use For: Predicting categories or classes

- Examples: Spam detection, defect detection, image recognition

- Data Requirements: Categorical target variable

Regression

- Use For: Predicting continuous numerical values

- Examples: Sales forecasting, price prediction, risk scoring

- Data Requirements: Numerical target variable

Clustering

- Use For: Finding patterns and groups in data

- Examples: Customer clustering, market segmentation, anomaly detection

- Data Requirements: No target variable needed

Time Series

- Use For: Temporal data analysis and forecasting

- Examples: Trend forecasting, seasonal analysis, demand planning

- Data Requirements: Time-based data with temporal patterns

Auto-Detect

- Smart Analysis: Examines data structure and column names

- Pattern Recognition: Identifies likely problem type based on data characteristics

- Fallback Option: Defaults to Classification if the problem type is unclear
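The tool's actual detection rules are not documented here. As a rough illustration of the idea, the sketch below guesses a problem type from the column names and the values in a target column, and falls back to Classification when nothing else matches. The function name `guess_problem_type`, the keyword list, and the cutoff of 10 distinct values are all assumptions made for this example.

```python
# Hypothetical hints and cutoff; the real tool's rules are not documented.
TIME_HINTS = ("date", "time", "month", "year")
MAX_CLASSES = 10  # assumed: more distinct numeric values than this suggests regression


def guess_problem_type(headers, rows, target=None):
    """Guess a problem type from headers and row values (illustrative only)."""
    # Without a target column there is nothing to predict, so assume clustering.
    if target is None or target not in headers:
        return "Clustering"

    idx = headers.index(target)
    values = [row[idx] for row in rows if row[idx] is not None]
    numeric = bool(values) and all(
        isinstance(v, (int, float)) and not isinstance(v, bool) for v in values
    )
    has_time_column = any(hint in h.lower() for h in headers for hint in TIME_HINTS)

    if numeric and has_time_column:
        return "Time Series"
    if numeric and len(set(values)) > MAX_CLASSES:
        return "Regression"
    # Fallback, mirroring the documented default.
    return "Classification"
```

A heuristic like this can misfire (for example, a column named "Lifetime" matches the "time" hint), which is one reason a manual override is useful.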

Key Features

Intelligent Problem Type Detection

- Auto-Detection: Automatically identifies problem type based on data structure

- Manual Selection: Choose from Classification, Regression, Clustering, Time Series, or Anomaly Detection

- Context Analysis: Examines column names and data patterns for intelligent suggestions

- Flexible Options: Override auto-detection with manual problem type selection

Comprehensive Model Recommendations

- Model Suggestions: Specific ML models tailored to your problem type and dataset size

- Complexity Assessment: Models rated by complexity, accuracy, and interpretability

- Tool Recommendations: Suggested frameworks and tools based on data characteristics

- Implementation Guidance: Detailed next steps and implementation roadmap

Professional Project Planning

- Effort Estimation: Realistic timeline estimates based on data complexity

- Resource Requirements: Guidance on computational and skill requirements

- Next Steps: Detailed roadmap from data preparation to model deployment

- Best Practices: Industry-standard approaches for your specific use case

Recommendation Components

Model Suggestions

The system provides model recommendations based on dataset characteristics:

For Small Datasets (<1,000 rows)

- Random Forest: Low complexity, high accuracy, medium interpretability

- Logistic Regression: Low complexity, medium accuracy, high interpretability

- SVM: Medium complexity, high accuracy, low interpretability

For Medium Datasets (1,000-10,000 rows)

- XGBoost: Medium complexity, very high accuracy, medium interpretability

- Neural Networks: High complexity, very high accuracy, low interpretability

- LightGBM: Medium complexity, high accuracy, medium interpretability

For Large Datasets (>10,000 rows)

- Deep Learning: High complexity, very high accuracy, low interpretability

- Ensemble Methods: Medium complexity, very high accuracy, medium interpretability

- AutoML: Low complexity, high accuracy, medium interpretability
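The size-based grouping above can be expressed as a simple lookup. This is a minimal sketch, not the tool's implementation: the function name `models_for_row_count` is hypothetical, and the ratings are copied from the lists above.

```python
# Each entry: (model, complexity, accuracy, interpretability), as listed above.
SMALL = [
    ("Random Forest", "Low", "High", "Medium"),
    ("Logistic Regression", "Low", "Medium", "High"),
    ("SVM", "Medium", "High", "Low"),
]
MEDIUM = [
    ("XGBoost", "Medium", "Very High", "Medium"),
    ("Neural Networks", "High", "Very High", "Low"),
    ("LightGBM", "Medium", "High", "Medium"),
]
LARGE = [
    ("Deep Learning", "High", "Very High", "Low"),
    ("Ensemble Methods", "Medium", "Very High", "Medium"),
    ("AutoML", "Low", "High", "Medium"),
]


def models_for_row_count(n_rows):
    """Return the model list for a dataset with n_rows data rows."""
    if n_rows < 1_000:
        return SMALL
    if n_rows <= 10_000:
        return MEDIUM
    return LARGE
```

For example, `models_for_row_count(500)` returns the small-dataset list, while a 10,001-row dataset falls into the large group.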

Tool Recommendations

Based on dataset size and complexity:

Small to Medium Datasets

- Scikit-learn: Python's premier ML library

- R: Statistical computing and graphics

- Excel Power Query: For simple models and data preparation

Large Datasets

- Python (Pandas): Data manipulation and analysis

- Apache Spark: Big data processing

- H2O.ai: Scalable machine learning platform

Specialized Tools

- Time Series: Prophet, Statsmodels

- Deep Learning: TensorFlow, PyTorch

- AutoML: H2O AutoML, auto-sklearn

Implementation Roadmap

The system provides a detailed next-steps guide:

- Data Quality Improvement: Clean the data first if the quality score is below 70%

- Data Splitting: Create train/validation/test sets

- Exploratory Data Analysis: Understand data patterns

- Feature Engineering: Create and select relevant features

- Baseline Model: Start with simple model for comparison

- Advanced Models: Experiment with recommended models

- Hyperparameter Tuning: Optimize model performance
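As an illustration of the Data Splitting step, the following sketch shuffles rows and divides them into train, validation, and test sets using only the Python standard library. The 70/15/15 ratio, the fixed seed, and the function name `split_rows` are assumptions chosen for the example; the tool does not prescribe them.

```python
import random


def split_rows(rows, train=0.70, validation=0.15, seed=42):
    """Shuffle rows and split them into (train, validation, test) lists."""
    shuffled = list(rows)  # copy so the caller's data is left untouched
    random.Random(seed).shuffle(shuffled)  # seeded for reproducible splits

    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * validation)

    train_set = shuffled[:n_train]
    val_set = shuffled[n_train:n_train + n_val]
    test_set = shuffled[n_train + n_val:]  # whatever remains
    return train_set, val_set, test_set
```

Random shuffling is not appropriate for time series data, where the split should preserve chronological order so that the model is evaluated on later periods than it was trained on.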

Effort Estimation

Complexity Factors

The system considers multiple factors for effort estimation:

- Dataset Size: Larger datasets require more processing time

- Column Count: More features increase complexity

- Problem Type: Some problems (Time Series, Anomaly Detection) are inherently more complex

- Data Quality: Poor quality data requires additional preprocessing

Effort Categories

- Low (1-2 days): Simple datasets with standard problem types

- Medium (3-5 days): Moderate complexity with some challenges

- High (1-2 weeks): Complex datasets or specialized problem types

- Very High (2+ weeks): Large, complex datasets with multiple challenges
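The exact scoring the tool uses is not described. One plausible way to combine the four factors is to count how many of them indicate added complexity and map that count to a category, as in the sketch below. The function name `estimate_effort` and the column-count threshold of 20 are assumptions; the 10,000-row and 70% quality thresholds are borrowed from other parts of this guide.

```python
# Problem types this guide describes as inherently more complex.
COMPLEX_PROBLEM_TYPES = {"Time Series", "Anomaly Detection"}

CATEGORIES = [
    "Low (1-2 days)",
    "Medium (3-5 days)",
    "High (1-2 weeks)",
    "Very High (2+ weeks)",
]


def estimate_effort(n_rows, n_columns, problem_type, quality_score):
    """Map four complexity factors to an effort category (illustrative scoring)."""
    score = 0
    if n_rows > 10_000:        # large dataset, per the size groups in this guide
        score += 1
    if n_columns > 20:         # assumed threshold for "many features"
        score += 1
    if problem_type in COMPLEX_PROBLEM_TYPES:
        score += 1
    if quality_score < 70:     # same cutoff as the data quality step
        score += 1
    # Cap the score so that three or more factors all map to the top category.
    return CATEGORIES[min(score, len(CATEGORIES) - 1)]
```

Under these assumptions, a 500-row, 5-column classification dataset with a quality score of 95 lands in the Low category, while a dataset that triggers every factor lands in Very High.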

Best Practices

Effective Model Selection

- Start Simple: Begin with recommended baseline models before trying complex approaches

- Consider Interpretability: Balance accuracy with explainability based on business needs

- Validate Thoroughly: Use proper train/validation/test splits for reliable evaluation

- Iterate Gradually: Build complexity incrementally rather than jumping to advanced models

Implementation Strategy

- Follow Roadmap: Use the provided next steps as a structured implementation guide

- Resource Planning: Consider computational requirements and team skills

- Tool Selection: Choose tools based on team expertise and infrastructure

- Timeline Management: Use effort estimates for realistic project planning

Common Use Cases

Business Analytics

- Customer Segmentation: Clustering models for market analysis

- Sales Forecasting: Time series models for demand planning

- Risk Assessment: Classification models for risk scoring

Operational Optimization

- Predictive Maintenance: Regression models for equipment monitoring

- Quality Control: Classification models for defect detection

- Resource Planning: Time series models for capacity planning

Research and Development

- Hypothesis Testing: Appropriate models for research questions

- Pattern Discovery: Clustering models for exploratory analysis

- Predictive Modeling: Regression models for outcome prediction

Understanding Recommendations

Model Characteristics

Each recommended model includes:

- Complexity: Implementation and computational complexity

- Accuracy: Expected performance level

- Interpretability: How easily results can be explained

Selection Criteria

Consider these factors when choosing models:

- Business Requirements: Accuracy vs. interpretability trade-offs

- Technical Constraints: Available computational resources

- Team Expertise: Familiarity with recommended tools and techniques

- Timeline: Available time for implementation and tuning
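To make the trade-off concrete, the sketch below picks the least complex model that still meets minimum accuracy and interpretability ratings. It is one possible way to apply the criteria, not the tool's own logic: the function name `simplest_model` and the numeric ordering of the rating labels are assumptions, and the candidate ratings are the small-dataset ratings from this guide.

```python
# Assumed ordering of the rating labels used in this guide.
LEVELS = {"Low": 1, "Medium": 2, "High": 3, "Very High": 4}

# Small-dataset candidates with the ratings given in this guide.
CANDIDATES = [
    {"name": "Random Forest", "complexity": "Low", "accuracy": "High", "interpretability": "Medium"},
    {"name": "Logistic Regression", "complexity": "Low", "accuracy": "Medium", "interpretability": "High"},
    {"name": "SVM", "complexity": "Medium", "accuracy": "High", "interpretability": "Low"},
]


def simplest_model(candidates, min_accuracy, min_interpretability="Low"):
    """Return the name of the least complex model meeting both minimums, or None."""
    eligible = [
        m for m in candidates
        if LEVELS[m["accuracy"]] >= LEVELS[min_accuracy]
        and LEVELS[m["interpretability"]] >= LEVELS[min_interpretability]
    ]
    if not eligible:
        return None
    # min() keeps the first candidate when complexity ratings tie.
    return min(eligible, key=lambda m: LEVELS[m["complexity"]])["name"]
```

With these ratings, requiring High accuracy selects Random Forest, while requiring High interpretability selects Logistic Regression. A result of `None` signals that the requirements should be relaxed or a larger candidate list considered.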

Frequently Asked Questions

Recommendations are based on industry best practices and data characteristics, but should be validated through experimentation.

Absolutely. Recommendations are starting points; experimentation with different approaches is encouraged.

Use the closest problem type or Auto-Detect, then adapt recommendations based on your specific requirements.

Start with the simplest model that meets your accuracy requirements, then experiment with more complex options if needed.

Related Documentation

Prepare Data for AI - ML-Ready Dataset Export

Transform Excel data into production-ready AI datasets with automated export, da...

AI Data Insights - Comprehensive Dataset Analysis

Generate detailed data insights with column analysis, data types, quality metric...

AI Data Validation - Quality Assessment Tool

Validate data quality for AI/ML projects with comprehensive checks for completen...