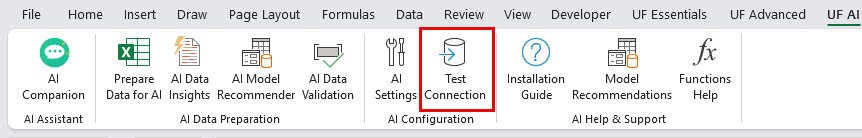

Test Connection - AI Service Connectivity Check

Test Connection is a diagnostic tool that verifies connectivity between Unleashed Flow and your local Ollama AI service. It checks that the service is reachable at the configured API URL, confirms that the selected model is available, and reports the connection status with details on any failure. Use it to troubleshoot AI service issues and to confirm the service is ready before relying on Unleashed Flow AI features.

How to Use

Manual Testing

- Open AI Settings: Click ⚙️ Settings in AI Companion or use the ribbon menu

- Navigate to Connection Tab: Ensure you're on the Connection configuration tab

- Verify Settings: Check that the API URL and selected model are correct

- Click Test Connection: Press the "Test Connection" button to start the diagnostic

- Review Results: Check status message for connection health

- Address Issues: Follow troubleshooting guidance if problems are found

Automatic Testing

- Background Checks: System automatically tests connection before AI operations

- Error Detection: Automatic detection of connection issues during use

- Retry Logic: Built-in retry mechanism for temporary connection problems

- Status Updates: Real-time status updates in AI tools
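
How the built-in retry mechanism works internally is not documented here. As a rough illustration only, retrying a temporary connection problem with exponential backoff can be sketched in Python as follows. The helper name `with_retries` and its parameters (`attempts`, `base_delay`, `sleep`) are hypothetical and are not part of Unleashed Flow or Ollama.

```python
import time


def with_retries(operation, attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call operation(); on a connection error, wait and try again.

    Hypothetical helper: names and defaults are illustrative assumptions.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(attempts):
        try:
            return operation()
        except (ConnectionError, TimeoutError) as error:
            last_error = error
            if attempt < attempts - 1:
                # Exponential backoff: base_delay, 2x, 4x, ...
                sleep(base_delay * (2 ** attempt))
    # Every attempt failed: surface the last error to the caller.
    raise last_error
```

Passing `sleep` as a parameter keeps the waiting strategy replaceable, for example to skip real delays when experimenting.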

Key Features

Comprehensive Connectivity Testing

- Service Availability: Verifies Ollama service is running and accessible

- API Endpoint Validation: Tests connection to configured API URL

- Model Accessibility: Confirms selected AI model is available and responsive

- Network Connectivity: Validates network path to AI service

- Response Time Testing: Measures connection latency and response times

Real-Time Diagnostics

- Instant Feedback: Immediate status updates during testing process

- Detailed Error Messages: Specific information about connection failures

- Status Indicators: Clear visual feedback on connection health

- Troubleshooting Guidance: Helpful suggestions for resolving issues

- Connection History: Track connection status over time

Integration Points

- AI Settings Dialog: Built-in "Test Connection" button

- Automatic Validation: Background connectivity checks during AI operations

- Error Recovery: Automatic retry logic for temporary connection issues

- Status Reporting: Connection status displayed throughout AI tools

Connection Test Process

Test Sequence

- API Endpoint Check: Verifies Ollama service is running on configured URL

- Service Response: Tests basic API response from Ollama

- Model Availability: Confirms selected model is installed and accessible

- Response Quality: Validates service can process requests properly

- Performance Check: Measures response time and service health
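
The sequence above can be approximated outside Excel with a short Python script. This is a sketch under stated assumptions, not Unleashed Flow's actual implementation: it relies only on Ollama's `/api/tags` endpoint (the same one used in the troubleshooting steps), and the function name `check_connection`, its result keys, and the default model `llama2` are illustrative choices.

```python
import json
import time
import urllib.error
import urllib.request


def check_connection(base_url="http://localhost:11434", model="llama2", timeout=5.0):
    """Run a basic reachability, model, and latency check.

    Returns a dict; network errors are reported in "error" instead of raised.
    """
    result = {"reachable": False, "model_available": False,
              "latency_ms": None, "error": None}
    started = time.perf_counter()
    try:
        # /api/tags lists the models installed in the local Ollama service.
        url = base_url.rstrip("/") + "/api/tags"
        with urllib.request.urlopen(url, timeout=timeout) as response:
            payload = json.load(response)
    except (urllib.error.URLError, OSError, ValueError) as error:
        result["error"] = str(error)
        return result
    result["latency_ms"] = round((time.perf_counter() - started) * 1000, 1)
    result["reachable"] = True
    models = payload.get("models", []) if isinstance(payload, dict) else []
    names = [entry.get("name", "") for entry in models]
    # Installed names carry a tag, so "llama2" is stored as "llama2:latest".
    result["model_available"] = model in names or model + ":latest" in names
    return result


if __name__ == "__main__":
    print(check_connection())
```

The measured latency covers only the model listing request, so it says nothing about how long a model takes to load or generate text.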

Test Results

Successful Connection

- Status: "✓ Connection successful"

- Details: Service running, model available, response time normal

- Action: Ready to use AI features

Connection Warnings

- Status: "⚠ Connection issues detected"

- Details: Service accessible but with performance or configuration issues

- Action: Review settings and consider optimization

Connection Failures

- Status: "❌ Connection failed"

- Details: Specific error information and troubleshooting steps

- Action: Address identified issues before using AI features
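
The exact rules that decide between the three results are not specified in this guide. The following Python sketch shows one plausible mapping, assuming that an unreachable service is a failure, that a missing model or a slow response is a warning, and that "slow" means more than 2000 ms. The function name `status_message` and the threshold are assumptions for illustration.

```python
def status_message(reachable, model_available, latency_ms, slow_threshold_ms=2000):
    """Map check outcomes to the three status strings shown in the dialog.

    The decision rules and the threshold are assumptions, not documented behavior.
    """
    if not reachable:
        return "❌ Connection failed"
    if not model_available:
        # Service responds, but the configuration points at a missing model.
        return "⚠ Connection issues detected"
    if latency_ms is not None and latency_ms > slow_threshold_ms:
        # Service responds, but slower than the assumed threshold.
        return "⚠ Connection issues detected"
    return "✓ Connection successful"
```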

Common Connection Issues

Service Not Running

- Error: "Cannot connect to Ollama service"

- Cause: Ollama is not installed or not running

- Solution: Install Ollama from https://ollama.ai and start the service

- Verification: Check if Ollama is running in system processes

Wrong API URL

- Error: "Invalid API endpoint"

- Cause: Incorrect API URL configuration

- Solution: Verify URL is http://localhost:11434 (default) or correct custom URL

- Verification: Test URL in web browser or command line
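
Many "Invalid API endpoint" cases come down to a missing scheme, stray whitespace, or a trailing path. A minimal Python sketch of cleaning up a URL before testing it is shown below. The function name `normalize_api_url` is hypothetical, and falling back to the default URL for an empty value is an assumption rather than documented Unleashed Flow behavior.

```python
from urllib.parse import urlsplit

DEFAULT_API_URL = "http://localhost:11434"


def normalize_api_url(raw):
    """Return scheme://host[:port] for a user-entered API URL, or raise ValueError."""
    text = (raw or "").strip()
    if not text:
        return DEFAULT_API_URL
    if "://" not in text:
        # Accept bare "host:port" input by assuming plain HTTP.
        text = "http://" + text
    parts = urlsplit(text)
    if parts.scheme not in ("http", "https") or not parts.hostname:
        raise ValueError(f"Invalid API endpoint: {raw!r}")
    port = parts.port  # raises ValueError if the port is not a valid number
    host = parts.hostname
    if ":" in host:
        host = f"[{host}]"  # IPv6 literals need brackets in a URL
    netloc = host if port is None else f"{host}:{port}"
    # Any path, query, or fragment is dropped; only the base URL is kept.
    return f"{parts.scheme}://{netloc}"
```

For example, `normalize_api_url("localhost:11434")` and `normalize_api_url(" http://localhost:11434/ ")` both yield `http://localhost:11434`.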

Model Not Available

- Error: "Selected model not found"

- Cause: AI model not installed or incorrectly specified

- Solution: Install model using ollama pull [model-name] command

- Verification: Use ollama list to see installed models

Network Issues

- Error: "Network connectivity problems"

- Cause: Firewall, proxy, or network configuration blocking connection

- Solution: Check firewall settings, proxy configuration, or network connectivity

- Verification: Test network connectivity to localhost or configured server
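
A successful ping only shows that the host answers; it does not show that the Ollama port accepts connections. A small Python sketch of a direct TCP check is given below. The function name `port_is_open` is illustrative, and the defaults simply mirror the default URL used in this guide.

```python
import socket


def port_is_open(host="localhost", port=11434, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


if __name__ == "__main__":
    print("Ollama port reachable:", port_is_open())
```

If this returns False while Ollama is running, a firewall rule or a different configured port is a likely cause.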

Performance Issues

- Error: "Connection timeout" or "Slow response"

- Cause: System resources, model loading, or service overload

- Solution: Wait for model loading, close other applications, or use smaller models

- Verification: Monitor system resources and Ollama service status

Troubleshooting Guide

Step-by-Step Diagnosis

1. Verify Ollama Installation

- Check if Ollama is installed: ollama --version

- Verify service is running: Check system processes for "ollama"

- Test basic functionality: ollama list to see installed models

2. Check Network Configuration

- Verify API URL in settings (default: http://localhost:11434)

- Test URL accessibility: Open http://localhost:11434/api/tags in browser

- Check firewall settings for port 11434

3. Validate Model Installation

- List installed models: ollama list

- Install required model: ollama pull llama2 (or desired model)

- Verify model works: ollama run llama2 "test message"

4. Test System Resources

- Check available RAM (models require roughly 4-16 GB depending on size; Ollama's own guidance is at least 8 GB for 7B models and 16 GB for 13B models)

- Monitor CPU usage during model loading

- Close unnecessary applications if resources are limited

5. Advanced Diagnostics

- Check Ollama logs for error messages

- Verify network connectivity with ping localhost

- Test with different models to isolate issues

Common Solutions

Restart Ollama Service

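    # Unload the running model (ollama stop expects a model name)
    ollama stop llama2
    # If the Ollama server itself is unresponsive, quit the Ollama app or end its process, then start it again
    ollama serve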

Reinstall Model

    # Remove model
    ollama rm llama2
    # Reinstall model
    ollama pull llama2

Reset Configuration

- Delete settings file: %AppData%\UnleashedFlow\ai-settings.json

- Restart Excel and reconfigure AI settings

- Test connection with default settings
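
Deleting the settings file discards any custom configuration, so moving it aside can be a safer variant of the same reset. The Python sketch below assumes the settings path given above and that Excel is closed while it runs. The function name `reset_ai_settings` and the backup file naming are hypothetical.

```python
import os
import shutil
import time
from pathlib import Path


def reset_ai_settings(appdata=None):
    """Move ai-settings.json to a timestamped backup; return the backup path.

    Returns None when there is no settings file to reset.
    """
    base = appdata or os.environ.get("APPDATA")
    if not base:
        raise RuntimeError("APPDATA is not set; pass the folder explicitly")
    settings = Path(base) / "UnleashedFlow" / "ai-settings.json"
    if not settings.is_file():
        return None
    stamp = time.strftime("%Y%m%d-%H%M%S")
    backup = settings.with_name(f"ai-settings.{stamp}.bak.json")
    # Moving instead of deleting keeps the old configuration recoverable.
    shutil.move(str(settings), str(backup))
    return backup


if __name__ == "__main__":
    print(reset_ai_settings())
```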

Best Practices

Regular Testing

- Periodic Checks: Test connection regularly to ensure continued functionality

- After Changes: Always test after modifying AI settings or installing new models

- Before Important Work: Verify connection before starting critical AI-assisted tasks

- System Updates: Test after system updates or Ollama updates

Proactive Monitoring

- Status Awareness: Pay attention to connection status indicators in AI tools

- Performance Monitoring: Watch for slow responses or timeout issues

- Resource Management: Monitor system resources when using AI features

- Error Logging: Keep track of connection issues for pattern identification
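
One lightweight way to keep track of connection issues is to append each test result to a text file and count recurring errors later. The Python sketch below is only an illustration: the log format (one JSON object per line) and the function names `record_result` and `error_counts` are assumptions and do not correspond to any Unleashed Flow feature.

```python
import json
from collections import Counter
from datetime import datetime, timezone


def record_result(log_path, status, error=None):
    """Append one connection test result as a JSON line."""
    entry = {
        "time": datetime.now(timezone.utc).isoformat(),
        "status": status,
        "error": error,
    }
    with open(log_path, "a", encoding="utf-8") as handle:
        handle.write(json.dumps(entry) + "\n")


def error_counts(log_path):
    """Count how often each error message appears in the log."""
    counts = Counter()
    with open(log_path, encoding="utf-8") as handle:
        for line in handle:
            if not line.strip():
                continue
            entry = json.loads(line)
            if entry.get("error"):
                counts[entry["error"]] += 1
    return counts
```

Calling `error_counts(path).most_common(3)` then shows the most frequent error messages recorded so far.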

Optimization Strategies

- Model Selection: Choose appropriate models based on system capabilities

- Resource Allocation: Ensure adequate system resources for AI operations

- Network Optimization: Optimize network settings for local AI service

- Configuration Tuning: Adjust settings based on connection test results

Frequently Asked Questions

Test whenever you experience AI issues, after configuration changes, or periodically to ensure continued functionality.

Try restarting Excel, clearing conversation history, or testing with a different AI model.

Yes, configure the API URL to point to your remote server and test normally.

It may indicate model loading, system resource constraints, or network issues. Monitor system resources and consider using smaller models.

Follow the step-by-step troubleshooting guide, starting with verifying Ollama installation and service status.

Related Documentation

AI Settings - Configure Local AI Models

Configure AI settings including Ollama connection, model selection, custom promp...
