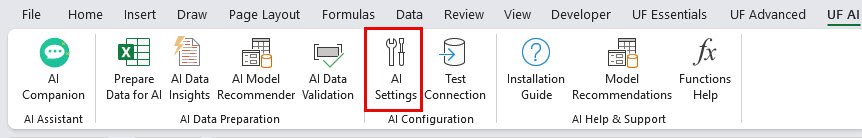

AI Settings - Configure Local AI Models

AI Configuration

AI Settings is where you configure the local AI integration, which runs through Ollama. Use it to set connection parameters, select an AI model, customize the system prompt, and set response length limits. Settings are organized into tabs and validated as you work, and all processing stays local, so your data remains private.

How to Use

Initial Setup

- Open Settings: Click ⚙️ Settings button in AI Companion or use ribbon menu

- Configure Connection: Verify the API URL (default: http://localhost:11434)

- Select Model: Choose from available models in dropdown

- Test Connection: Click "Test Connection" to verify setup

- Customize Prompts: Modify default prompt if desired

- Adjust Advanced Settings: Set token limits based on your needs

- Save Configuration: Click "Save" to apply settings
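
The connection check in the steps above can also be reproduced outside Excel. The following is a minimal sketch, assuming Ollama's standard REST API, where GET /api/tags returns the installed models. The function name check_ollama is illustrative and not part of the add-in, and the add-in's own test may work differently.

```python
import json
import urllib.request
from typing import Tuple

DEFAULT_API_URL = "http://localhost:11434"  # default shown in the settings dialog


def check_ollama(base_url: str = DEFAULT_API_URL, timeout: float = 3.0) -> Tuple[bool, str]:
    """Return (ok, message) after asking Ollama for its installed models.

    check_ollama is a hypothetical helper, not an add-in function.
    """
    url = base_url.rstrip("/") + "/api/tags"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            payload = json.load(response)
    except (OSError, ValueError) as exc:
        # OSError covers refused connections and timeouts (URLError is a subclass);
        # ValueError covers malformed URLs and non-JSON replies.
        return False, f"Cannot connect to Ollama at {base_url}: {exc}"
    models = payload.get("models", []) if isinstance(payload, dict) else []
    return True, f"Connected to {base_url}; {len(models)} model(s) installed"
```

Calling check_ollama() with no arguments tests the default URL. A False result with a "connection refused" message usually means the Ollama service is not running.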

Model Selection Process

- Refresh Models: Click 🔄 to discover available models

- Choose Appropriate Model: Select based on your needs:

  - llama2: General-purpose conversational AI

  - codellama: Programming and technical assistance

  - mistral: Efficient general-purpose model

  - phi: Compact model for quick responses

- Test Selection: Use "Test Connection" to verify model works

- Save Settings: Apply configuration changes
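
To illustrate what model discovery involves, the sketch below parses the kind of JSON that Ollama's GET /api/tags endpoint returns and matches a base model name against it. The sample payload is abbreviated with made-up sizes, and installed_models and pick_model are hypothetical helper names. How the add-in fills its dropdown is an assumption here.

```python
import json
from typing import List, Optional

# Abbreviated example of an /api/tags response body (sizes are made up).
SAMPLE_TAGS_RESPONSE = """
{"models": [
  {"name": "llama2:latest", "size": 3800000000},
  {"name": "codellama:7b", "size": 3800000000},
  {"name": "mistral:latest", "size": 4100000000}
]}
"""


def installed_models(tags_json: str) -> List[str]:
    """Return the sorted model names from an /api/tags response body."""
    payload = json.loads(tags_json)
    return sorted(entry["name"] for entry in payload.get("models", []))


def pick_model(available: List[str], preferred: str) -> Optional[str]:
    """Match a base name such as 'llama2' against tagged names like 'llama2:latest'."""
    for name in available:
        if name == preferred or name.split(":", 1)[0] == preferred:
            return name
    return None  # not installed; 'ollama pull <name>' would be needed first


models = installed_models(SAMPLE_TAGS_RESPONSE)
print(models)
print(pick_model(models, "codellama"))
print(pick_model(models, "phi"))
```

With this sample, phi is not in the list, so pick_model returns None. That corresponds to a model that still has to be installed before it can appear in the dropdown.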

Prompt Customization Process

- Access Prompt Tab: Switch to "Default Prompt" tab

- Review Current Prompt: Examine existing system prompt

- Customize Behavior: Modify prompt to change AI personality

- Test Changes: Use AI Companion to verify prompt works as expected

- Reset if Needed: Use Reset button to restore defaults

Settings Interface

Tabbed Configuration System

- Connection Tab: API URL, model selection, and connection testing

- Default Prompt Tab: System prompt customization and behavior configuration

- Advanced Tab: Response length controls and performance optimization

Real-Time Validation

- Connection Testing: Built-in connectivity verification

- Model Discovery: Automatic detection of available local models

- Status Feedback: Real-time status updates and error reporting

- Settings Persistence: Automatic saving to workbook and global configuration

Connection Configuration

API Connection Settings

- API URL: Ollama server endpoint (default: http://localhost:11434)

- Model Selection: Choose from available local AI models

- Model Refresh: Dynamic model list updates with 🔄 refresh button

- Connection Testing: "Test Connection" button for connectivity verification

Model Management

- Available Models: Dropdown list of installed Ollama models

- Model Types: Support for llama2, codellama, mistral, phi, and other Ollama-compatible models

- Refresh Capability: Update model list without restarting Excel

- Selection Persistence: Remember selected model across sessions

Connection Testing

- Test Connection Button: Verify connectivity to Ollama service

- Status Display: Real-time connection status and error messages

- Help Integration: Built-in help system with installation guidance

- Troubleshooting: Detailed error messages for connection issues

Prompt Customization

System Prompt Editor

- Multi-line Editor: Rich text editor for system prompt customization

- Default Behavior: Pre-configured prompt optimized for Excel assistance

- Custom Personalities: Create specialized AI assistants for different use cases

- Reset Functionality: Quick restoration to default settings with Reset button

Prompt Engineering

- Behavior Specification: Define how AI should respond to different question types

- Context Integration: Specify how AI should handle Excel data and context

- Response Style: Control formality, detail level, and explanation depth

- Domain Expertise: Configure AI for specific domains (finance, marketing, technical)

Default Prompt Template

The default system prompt is:

You are an Excel AI assistant. Help with formulas, data analysis, and spreadsheet tasks. Be concise and practical.
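
For illustration, this is roughly how a system prompt such as the default above reaches a model through Ollama's /api/chat endpoint, which accepts a list of role-tagged messages and an options object. How the add-in builds its requests internally is not documented here, so treat the function name build_chat_request and the choice of endpoint as assumptions.

```python
import json

DEFAULT_PROMPT = (
    "You are an Excel AI assistant. Help with formulas, data analysis, "
    "and spreadsheet tasks. Be concise and practical."
)


def build_chat_request(model, question, system_prompt=DEFAULT_PROMPT, max_tokens=1000):
    """Build a JSON-serializable body for POST /api/chat (hypothetical helper)."""
    return {
        "model": model,
        "stream": False,  # ask for one complete reply instead of a token stream
        "messages": [
            {"role": "system", "content": system_prompt},  # sets the AI's behavior
            {"role": "user", "content": question},
        ],
        "options": {"num_predict": max_tokens},  # caps the response length in tokens
    }


body = build_chat_request("llama2", "How do I sum column A where column B is 'Paid'?")
print(json.dumps(body, indent=2))
```

Swapping system_prompt for a domain-specific text (for example, a finance-focused assistant) changes the model's behavior without touching the rest of the request.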

Advanced Settings

Response Length Controls

- Chat Response Max Tokens: Slider control (500-8000 tokens)

- Function Response Max Tokens: Slider control (100-2000 tokens)

- Real-time Display: Token count display updates as you adjust sliders

- Performance Guidance: Built-in tips for optimal token settings

Token Limit Guidelines

- 500-1000 tokens: Quick responses (~375-750 words)

- 1000-2000 tokens: Standard responses (~750-1500 words)

- 2000-4000 tokens: Comprehensive analysis (~1500-3000 words)

- 4000-8000 tokens: Extensive responses (~3000-6000 words)
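
The word counts in these guidelines follow a ratio of about 0.75 words per token (500 tokens is roughly 375 words). The sketch below applies that ratio and clamps a requested value to the slider ranges listed under Response Length Controls. The ratio is a rule of thumb, since actual tokenization varies by model and text, and the helper names are illustrative.

```python
from typing import Tuple

WORDS_PER_TOKEN = 0.75        # ratio implied by the guideline table
CHAT_RANGE = (500, 8000)      # chat response slider limits
FUNCTION_RANGE = (100, 2000)  # function response slider limits


def estimate_words(tokens: int) -> int:
    """Approximate how many words fit in a given token budget."""
    return round(tokens * WORDS_PER_TOKEN)


def clamp(tokens: int, limits: Tuple[int, int]) -> int:
    """Keep a requested token limit inside a slider's range."""
    low, high = limits
    return max(low, min(high, tokens))


for tokens in (500, 1000, 2000, 4000, 8000):
    print(f"{tokens} tokens ~ {estimate_words(tokens)} words")
```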

Performance Optimization

- Response Speed: Lower token limits for faster responses

- Quality Balance: Higher limits for more detailed, comprehensive answers

- Memory Management: Efficient handling of conversation context

- Resource Considerations: Guidance on system resource usage

Settings Persistence

Multi-Level Configuration

- Global Settings: Stored in user AppData for system-wide defaults

- Workbook Settings: Stored in Excel workbook properties for project-specific configuration

- Priority System: Workbook settings override global settings when available
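
The priority rule can be pictured as a dictionary merge in which workbook values win whenever they are present. This is a sketch of the rule only: the key names (model, chat_max_tokens, api_url) are hypothetical, and the add-in's actual merge logic is not documented here.

```python
def effective_settings(global_settings: dict, workbook_settings: dict) -> dict:
    """Overlay workbook settings on global defaults; unset (None) values fall through."""
    merged = dict(global_settings)  # start from the system-wide defaults
    merged.update({k: v for k, v in workbook_settings.items() if v is not None})
    return merged


# Hypothetical key names, for illustration only.
global_defaults = {"api_url": "http://localhost:11434", "model": "llama2", "chat_max_tokens": 2000}
workbook_overrides = {"model": "codellama", "chat_max_tokens": None}

print(effective_settings(global_defaults, workbook_overrides))
```

Here the workbook changes only the model. The API URL and token limit still come from the global defaults.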

Configuration Storage

- JSON Format: Settings stored in structured JSON format

- Location: %AppData%\UnleashedFlow\ai-settings.json

- Backup: Automatic backup of configuration settings

- Portability: Settings can be shared across systems
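
Because the global settings are plain JSON, they can be inspected from a script, for example before copying them to another machine. The sketch below builds the path from the Location entry above and reads whatever JSON object it finds. The file's key names are not documented here, so the code assumes nothing about its schema.

```python
import json
import os
from pathlib import Path


def settings_path() -> Path:
    """Resolve %AppData%\\UnleashedFlow\\ai-settings.json for the current user."""
    appdata = os.environ.get("APPDATA", "")  # set on Windows; empty elsewhere
    return Path(appdata) / "UnleashedFlow" / "ai-settings.json"


def load_settings(path: Path) -> dict:
    """Return the settings as a dict, or {} if the file is missing or unreadable."""
    try:
        with open(path, encoding="utf-8") as handle:
            data = json.load(handle)
    except (OSError, json.JSONDecodeError):
        return {}
    return data if isinstance(data, dict) else {}


if __name__ == "__main__":
    for key, value in load_settings(settings_path()).items():
        print(f"{key}: {value}")
```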

Best Practices

Configuration Management

- Document Settings: Keep records of configuration changes and purposes

- Test Thoroughly: Always test configuration changes with representative tasks

- Backup Configurations: Keep backups of working configurations

- Version Control: Track configuration versions for different use cases

Model Selection Strategy

- Start Simple: Begin with general-purpose models like llama2

- Specialize Gradually: Move to specialized models for specific needs

- Consider Resources: Balance model capability with system resources

- Update Regularly: Keep models updated for improved performance

Performance Optimization

- Token Management: Adjust token limits based on response requirements

- Connection Stability: Ensure stable Ollama connection

- Resource Monitoring: Monitor system resources during AI operations

- Error Handling: Configure appropriate error handling and timeouts

Troubleshooting

Connection Issues

- "Cannot connect to Ollama": Verify Ollama is installed and running

- "No models available": Install models using ollama pull [model-name]

- "Connection timeout": Check firewall settings and Ollama service status

- "Invalid API URL": Verify URL format and port number
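
For the "Invalid API URL" case, a quick format check can rule out typos before looking at the service itself. This is a minimal sketch using Python's standard URL parser. The function name validate_api_url and its messages are illustrative, and the add-in's own validation rules may differ.

```python
from typing import List
from urllib.parse import urlsplit


def validate_api_url(url: str) -> List[str]:
    """Return a list of problems found in the URL; an empty list means it looks fine."""
    try:
        parts = urlsplit(url.strip())
        port = parts.port  # raises ValueError for a non-numeric or out-of-range port
    except ValueError as exc:
        return [f"Malformed URL: {exc}"]
    problems = []
    if parts.scheme not in ("http", "https"):
        problems.append("URL must start with http:// or https://")
    if not parts.hostname:
        problems.append("URL is missing a host name")
    if port is None:
        problems.append("No port given; Ollama listens on 11434 by default")
    return problems


for candidate in ("http://localhost:11434", "localhost:11434", "http://localhost:abc"):
    print(candidate, "->", validate_api_url(candidate) or "OK")
```

A URL that passes this check can still fail to connect. The check only covers the format, not whether Ollama is reachable.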

Model Issues

- "Model not found": Refresh model list or reinstall model

- "Model loading slowly": Large models take time to load initially

- "Out of memory": Use smaller models or increase system RAM

- "Inconsistent responses": Clear conversation history or restart service

Settings Issues

- "Settings not saving": Check file permissions in AppData directory

- "Configuration reset": Verify workbook properties are accessible

- "Prompt not working": Test prompt changes with simple questions first

Integration with AI Tools

Workflow Integration

AI Settings provides the foundation for all AI tools:

- AI Companion: Uses connection and prompt settings

- AI Data Tools: Leverage model selection and performance settings

- UDF Functions: Use function token limits and model configuration

Consistent Configuration

- Unified Settings: All AI tools use the same configuration

- Seamless Experience: Settings apply across all AI features

- Centralized Management: Single location for all AI configuration

Frequently Asked Questions

Which model should I use?

Start with llama2 for general tasks, use codellama for technical assistance, or choose mistral for balanced performance.

How do I install additional models?

From the Ollama command line, run ollama pull [model-name], then refresh the model list in settings.

Can different workbooks use different settings?

Yes, workbook-specific settings override global settings automatically.

What happens if Ollama is not running?

AI features show connection errors. Start the Ollama service, then test the connection in settings.

How do I restore the default settings?

Use the Reset button in the Default Prompt tab, or delete the settings file and restart Excel.

Related Documentation

Test Connection - AI Service Connectivity Check

Verify AI service connectivity and troubleshoot connection issues with comprehen...
